Learn by Observation: Imitation Learning for Drone Patrolling from Videos of A Human Navigator

This paper has been accepted to IROS 2020.

Abstract

We present an imitation learning method for autonomous drone patrolling based only on raw videos. Unlike previous methods, we propose to let the drone learn to patrol in the air by observing and imitating how a human navigator does it on the ground. The observation process enables automatic data collection and annotation using inter-frame geometric consistency, which reduces manual effort while keeping accuracy high. A newly designed neural network is then trained on the annotated data to predict appropriate directions and translations, so that the drone patrols in a lane-keeping manner as humans do. Our method allows the drone to fly at a high altitude with a broad view and low risk. It can also detect all accessible directions at crossroads and integrate available user instructions with autonomous patrolling control commands. Extensive experiments demonstrate the accuracy of the proposed imitation learning process and the reliability of the holistic system for autonomous drone navigation.

Video demonstration 1

Video demonstration 2 (long flight without user instruction)

Download

Paper:

arXiv

Auto-labeling using Inter-Frame Geometric Consistency:

Code

Patrol Dataset:

Testing, Training

UAVPatrolNet:

Code

UAV Autonomous Navigation System for Patrol:

User interface of Ground Computing Unit, Data Transmission Unit

Quick Showcase

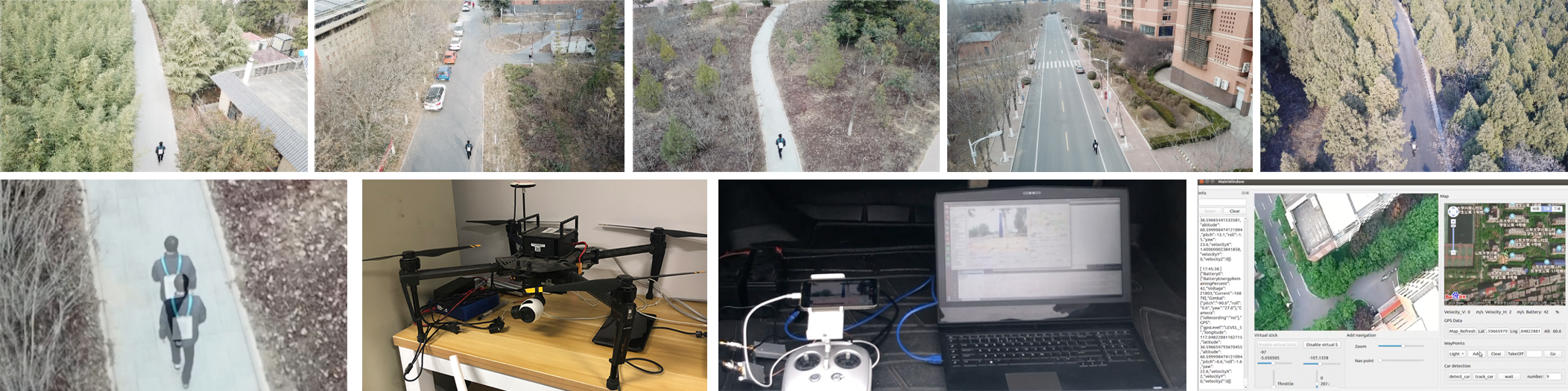

The real-world system we designed consists of three parts: a drone unit, a computing unit, and a data transmission unit.

User instructions are shown on the interface and affect the drone's flight path.
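The page does not spell out how a user instruction is merged with the autonomous patrolling command. The sketch below shows one plausible arbitration rule, assuming the network has already produced a list of accessible headings (radians, 0 = straight ahead, positive = right) and that instructions arrive as the strings `"left"`, `"straight"`, or `"right"`. The function name `choose_direction`, the instruction vocabulary, and `turn_threshold` are all hypothetical and are not taken from the authors' code.

```python
from typing import Optional, Sequence


def choose_direction(directions: Sequence[float],
                     instruction: Optional[str] = None,
                     turn_threshold: float = 0.35) -> Optional[float]:
    """Pick one heading (radians, 0 = straight ahead, positive = right).

    Hypothetical rule: follow the user instruction when a matching
    direction exists; otherwise keep patrolling autonomously by taking
    the detected direction closest to straight ahead.
    """
    if not directions:
        return None  # nothing accessible detected; the caller could hover
    autonomous = min(directions, key=abs)  # most forward-facing heading
    if instruction == "left":
        candidates = [d for d in directions if d < -turn_threshold]
        return min(candidates) if candidates else autonomous  # leftmost branch
    if instruction == "right":
        candidates = [d for d in directions if d > turn_threshold]
        return max(candidates) if candidates else autonomous  # rightmost branch
    # "straight" or no instruction: continue along the most forward heading.
    return autonomous
```

With detected headings `[-1.5, 0.1, 1.4]` at a crossroads, no instruction yields `0.1`, while `"left"` yields `-1.5`. Falling back to the autonomous choice when an instruction cannot be satisfied keeps the drone patrolling instead of stalling.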

During data auto-labeling, we exploit the temporal correlation between adjacent frames to generate image labels: the navigator's positions in two frames indicate the patrolling direction and where the patrolling center is.
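A minimal sketch of how a label could be derived from two frames, assuming the inter-frame geometric relation is available as a 3x3 homography `H` that maps pixels of the later frame into the earlier one (for example, estimated from feature matches), and that the navigator has already been localized as a pixel coordinate in each frame. The helper names, the angle convention, and the normalized lateral offset used as the "patrolling center" label are illustrative assumptions, not the released auto-labeling code.

```python
import math


def apply_homography(H, point):
    """Map a pixel (x, y) through a 3x3 homography H given as nested lists."""
    x, y = point
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return (u / w, v / w)


def label_from_frames(H_next_to_cur, nav_cur, nav_next, image_width):
    """Hypothetical labeler returning (direction_rad, lateral_offset).

    direction_rad: heading of the navigator's motion in the current frame,
        measured from the image's upward axis (positive = to the right).
    lateral_offset: navigator's horizontal offset from the image center,
        normalized to [-1, 1], used here as the patrolling-center label.
    """
    # Bring the later navigator position into the current frame's coordinates
    # so that camera motion between the frames is compensated.
    nx, ny = apply_homography(H_next_to_cur, nav_next)
    dx = nx - nav_cur[0]
    dy = ny - nav_cur[1]
    # Image y grows downward, so moving "forward" (up the image) is -dy.
    direction = math.atan2(dx, -dy)
    half = image_width / 2.0
    offset = (nav_cur[0] - half) / half
    return direction, offset
```

For example, with an identity homography, a navigator at `(320, 300)` who later appears at `(420, 200)` in a 640-pixel-wide image gives a heading of about `pi/4` (forward and to the right) and an offset of `0.0`.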

The dotted lines show the predicted Gaussian mixture probability distribution. Sections of the dotted lines above the threshold are colored green, and the rest are purple. The yellow straight line represents the control command sent to the drone.
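The green/purple coloring above suggests a simple post-processing step: evaluate the predicted mixture over candidate headings, keep the contiguous runs above the threshold, and treat the peak of each run as one accessible direction. The sketch below assumes a 1-D Gaussian mixture over heading angle sampled on `[-pi/2, pi/2]`; the parameterization, angular range, sampling density, and threshold value are not given on this page, so all of them (and the function names) are hypothetical.

```python
import math
from typing import List, Sequence


def gmm_density(theta: float, weights: Sequence[float],
                means: Sequence[float], sigmas: Sequence[float]) -> float:
    """Density of a 1-D Gaussian mixture at heading angle theta (radians)."""
    total = 0.0
    for w, mu, s in zip(weights, means, sigmas):
        z = (theta - mu) / s
        total += w * math.exp(-0.5 * z * z) / (s * math.sqrt(2.0 * math.pi))
    return total


def accessible_directions(weights, means, sigmas, threshold: float,
                          num_samples: int = 361) -> List[float]:
    """Return the peak angle of every contiguous run above `threshold`.

    Each run corresponds to one "green" section of the plotted curve.
    """
    lo, hi = -math.pi / 2, math.pi / 2
    step = (hi - lo) / (num_samples - 1)
    peaks: List[float] = []
    best = None  # (density, angle) of the highest sample in the current run
    for i in range(num_samples):
        a = lo + i * step
        d = gmm_density(a, weights, means, sigmas)
        if d > threshold:
            if best is None or d > best[0]:
                best = (d, a)
        elif best is not None:  # run just ended: record its peak
            peaks.append(best[1])
            best = None
    if best is not None:  # run touching the right edge of the range
        peaks.append(best[1])
    return peaks
```

With two well-separated components at `-1.0` and `1.0` rad (weights `0.5`, sigmas `0.1`) and a threshold of `0.5`, the function returns two headings within one sampling step of `-1.0` and `1.0`, i.e. a fork with a left and a right branch. The resulting list can then be handed to whatever rule merges user instructions with autonomous control.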